Welcome to the VQGAN implementation in JAX/Flax

This site contains the project documentation for jax-vqgan.

What you need to know

JAX is a recent machine/deep learning library developed at Google and used heavily at DeepMind. Unlike TensorFlow, JAX is not an official Google product and is used mainly for research purposes. Its use is growing in the research community thanks to features such as automatic differentiation (`grad`), just-in-time compilation through XLA (`jit`), and automatic vectorization (`vmap`). Additionally, JAX has a NumPy-like syntax, so there is little new syntax to learn.
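To make the NumPy-like syntax concrete, here is a minimal sketch that is not taken from this project. The function `mse` and the toy arrays are made up for illustration; only `jax.numpy`, `jax.grad` and `jax.jit` are real JAX APIs.

```python
import jax
import jax.numpy as jnp


def mse(w, x, y):
    # Mean squared error of a linear model; reads like plain NumPy.
    pred = jnp.dot(x, w)
    return jnp.mean((pred - y) ** 2)


# jax.grad differentiates with respect to the first argument (w);
# jax.jit compiles the resulting function with XLA.
mse_grad = jax.jit(jax.grad(mse))

# Toy data, purely illustrative.
x = jnp.array([[1.0, 2.0], [3.0, 4.0]])
y = jnp.array([1.0, 2.0])
w = jnp.zeros(2)

g = mse_grad(w, x, y)  # gradient of the loss with respect to w, shape (2,)
```

The same function body would work with `numpy` in place of `jax.numpy`; what JAX adds are the function transformations wrapped around it.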

Flax is a high-performance neural network library for JAX that is designed for flexibility: Try new forms of training by forking an example and by modifying the training loop, not by adding features to a framework.

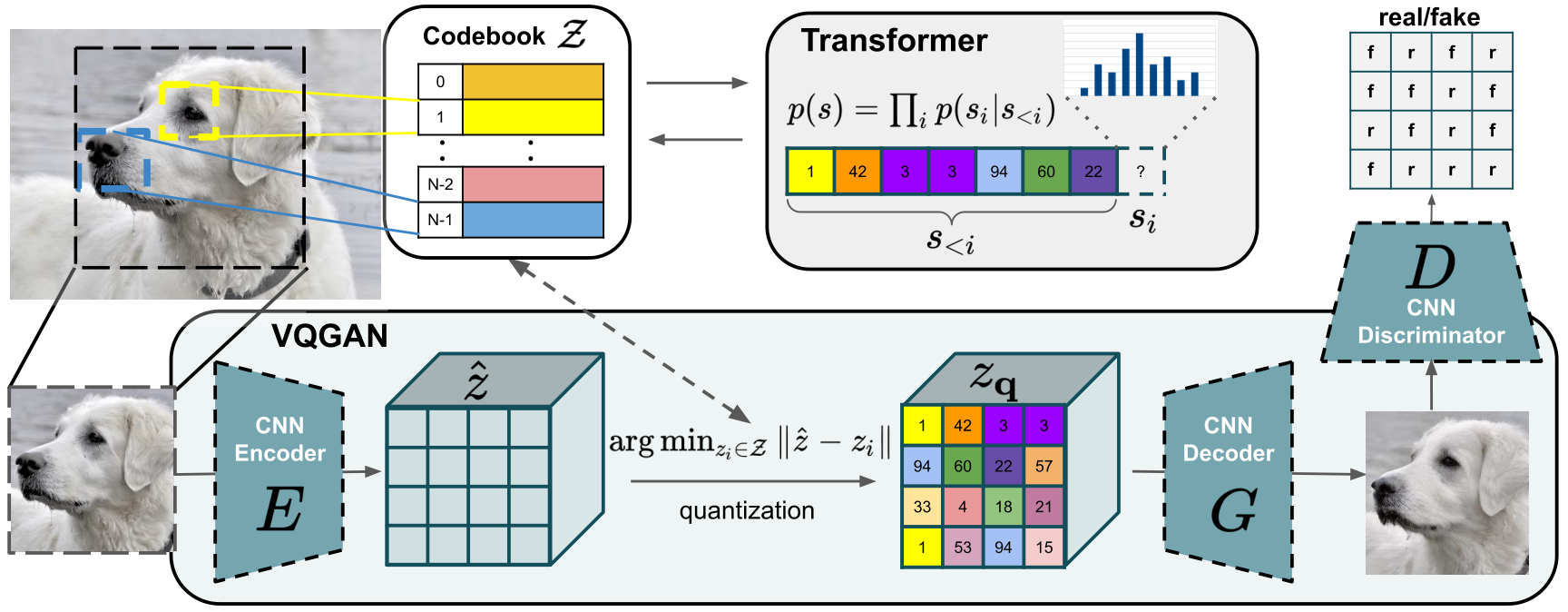

VQGAN (Vector Quantized Generative Adversarial Network): VQGAN is a GAN architecture which can be used to learn and generate novel images based on previously seen data. It was first introduced in the paper Taming Transformers for High-Resolution Image Synthesis (2021). It works by first passing image data through a convolutional encoder, which produces a feature map of the visual parts of the image. This feature map is then vector quantized: a form of signal processing which groups vectors into clusters, each represented by a vector marking its centroid, called a “codeword.” The learned dictionary of codewords is known as the codebook, and every feature vector is replaced by its nearest codeword. A decoder reconstructs the image from the quantized features, while a discriminator pushes the reconstructions to look realistic. The sequence of codebook indices acts as an intermediate representation of the image, which is then input to a transformer. The transformer is trained to model the composition of these sequences, so that new code sequences can be sampled and decoded into high-resolution images.
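The quantization step can be sketched in a few lines of JAX. This is an illustrative sketch, not the code used in this project: the function name `quantize`, the shapes and the toy values are assumptions, and the straight-through gradient trick shown here is the approach commonly used for vector-quantized models.

```python
import jax
import jax.numpy as jnp


def quantize(z, codebook):
    """Replace each feature vector in z with its nearest codeword.

    z:        (n, d) encoder outputs, one row per spatial position
    codebook: (k, d) codewords
    """
    # Squared Euclidean distance between every row of z and every codeword, shape (n, k).
    dists = (
        jnp.sum(z ** 2, axis=1, keepdims=True)
        - 2.0 * z @ codebook.T
        + jnp.sum(codebook ** 2, axis=1)
    )
    indices = jnp.argmin(dists, axis=1)  # index of the nearest codeword per row
    z_q = codebook[indices]              # quantized features, shape (n, d)
    # Straight-through estimator: the forward value is z_q,
    # but gradients flow back to z as if quantization were the identity.
    z_q_st = z + jax.lax.stop_gradient(z_q - z)
    return z_q_st, indices


# Toy codebook with 3 codewords of dimension 2 (hypothetical values).
codebook = jnp.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]])
z = jnp.array([[0.1, -0.2], [0.9, 1.2], [-0.8, 1.7]])

z_q, idx = quantize(z, codebook)
```

In this toy case each row of `z` lies closest to a different codeword, so `idx` holds one index per row and `z_q` contains the matching codewords. The `indices` array is what would be flattened into a sequence and given to the transformer.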

This project provides an implementation of VQGAN in JAX/Flax, together with a Trainer module, dataset loading and TensorBoard logging. In the future we will try to add the ability to ship models to Hugging Face. This is one of my first polished projects, so if you have advice on how to improve it or you spot a problem, please open an issue or a pull request 😊(evil smile face).

Table Of Contents

The documentation follows the best practice for project documentation as described by Daniele Procida in the Diátaxis documentation framework. It consists of four separate parts (tutorials, how-to guides, reference and explanation) plus a changelog.

Acknowledgements

I want to thank me, myself and I 🥸. But honestly, I am thankful to the Taming Transformers authors for this marvelous architecture, and to this repo, on which I based my implementation ("stole some code", one could say) 👤💰.

FURTHERMORE YOU MUST WATCH THIS TIKTOK USER

AND LASTLY, I advise listening to this song, as it was my coding song for this project. Click on Captain Cat Sparrow, made by DALL·E 2, to listen to it 🎧.